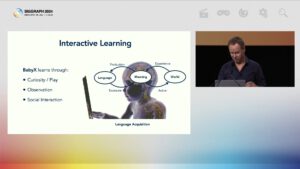

The world’s premier conference on computer graphics and interactive media began this year’s edition with a keynote on artificial babysitting. Siggraph 2024 introduced Baby X, one of the most complex and fundamental research programs at the intersection of AI and computer visualization.

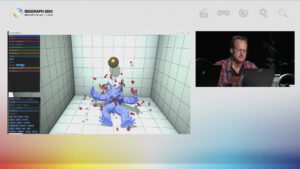

Visually, the project unveiled a highly detailed graphical representation of a human baby, from bone structure to body systems, including simulations of physics and friction within and between those body parts. The layers of artificial intelligence encompassed motor and sensory systems, behavioral states, and cognitive processes, all interwoven and activated by autonomous machine learning procedures. The goal is to evolve an artificial mind through system-inherited environmental awareness and stimulating social interaction—a creature emerging between animation and simulation that could become a role model for new ways to interact with technology.

The tone was set for AI to take center stage, and indeed, AI dominated every stage of the conference. Beyond image generation and avatar customization, a wide array of text-to-graphics applications showcased automated workflows for 3D modeling, animation, gameplay mechanics, and virtual production in film, games, and various digital media installations. Nvidia’s CEO even proclaimed that the entire conference had shifted from artful graphic programming to becoming an AI convention, underscoring his visionary disruption during a fireside chat with the CEO of Meta, one of the major clients of Nvidia’s AI framework product lines.

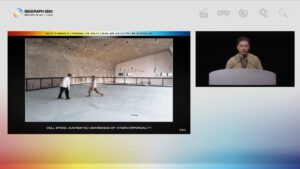

It might be tempting to view all the other program tracks at the Denver convention as subtask research activities contributing to the education of Baby X. Art projects and immersive experiences transformed intuitive social and spatial relationships into datasets, only visible with head-mounted displays. Technical papers on scanning, capturing, and texturing technologies recreated digital environments and body parts. Voxel physics calculated the friction and consistency of virtual object behaviors and dissections. Stunning animation effects elevated visual perception into believable imagination. A (human) teenager even designed a virtual environment in an LED-CAVE installation using speech recognition to prompt an AI animation toolset. One could foresee Baby X growing into a full-fledged Holodeck system, simply by combining exponential AI advancements with increasingly realistic computer graphics. In theory.

In practice, however, the commercial chatbot generations based on Baby X research still look as uncanny as ever. HMDs remain cumbersome on the face. Animation effects are primarily limited to cinematics. Text-to-graphics generation can also be achieved on a Chinese laptop, including miniature models of the CEOs of Meta and Nvidia. And Siggraph has not yet been renamed to Siggraiph. The world’s premier conference on computer graphics and interactive media has managed to maintain a delicate balance between art and technology for more than half a century. By cultivating a community of wisdom and care, the education of Baby X is in good hands—both in theory and in practice.